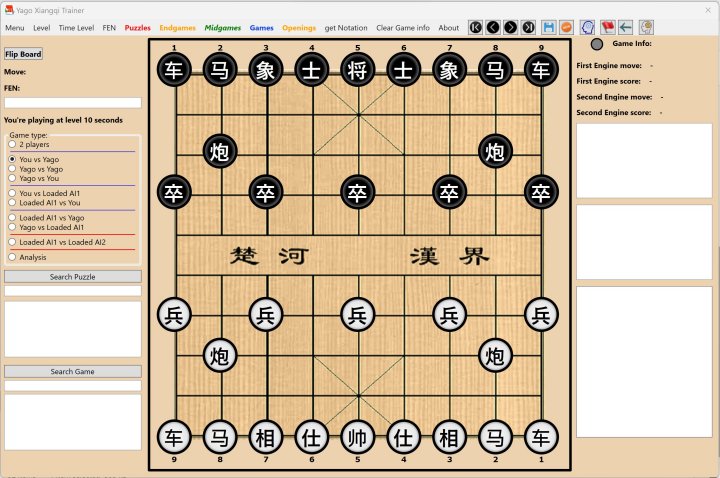

A friend of mine has created an amazing new app for Chinese chess, along with his own engine. The app is not commercial or sold anywhere; I'm just testing it.

He is obsessed with finding ways to make his engine more powerful than commercial engines. Personally, I don't think the real need today is to have the strongest engine, because I'm sure that even the 100th engine on the rating list can wipe the floor with the world champion at any time. I think the real challenge today is to create an engine that plays like a human being and is fun to play against.

I had the weekend off and wanted to play chess. Since I wasn't born rich and my money comes from very long hours at work, I checked how much it would cost to get a US Chess membership and play in a tournament. Membership cost $45, and the entry fee for a local tournament was $90.

I thought spending $135 to play 5 games was way too expensive. While I was considering my options, I saw the advertisement for the new Fritz 19: for only $83 you can create a Playchess account, watch videos or play online 24 hours a day! So the choice was simple: I want to play chess, not spend all my money on memberships and tournament entry fees just to play a few games.

Then another thought came to me... I'd like to know my rating, maybe I can use Fritz 19 for that.

In my opinion, ratings among people, especially non-professionals, don't measure much. In most tournaments there are a lot of young kids who have nothing to do but play chess all day long. Many of them have expensive coaches paid for by their parents, and they play tournaments every weekend. So when you meet them the result is bad, because such kids are often underestimated.

On the other hand, even between adults, there can be tournaments where you do better because you're more relaxed, less tired from work, and tournaments where you do worse because maybe you didn't sleep well in the hotel where the tournament is being played.

One of the things I asked myself was whether I could use Fritz or other ChessBase engines to predict my rating. A couple of years ago, when I was at the top of my chess career, I played long series of blitz games, setting the engine's Elo to 1700, 1800 and 1900 to see whether the results differed.

Most of the time the result was 6-0 for the engine.

Then I noticed that Lichess and also Chess.com started to create bots and I wanted to see if I could beat them. Unfortunately the experiment was cut short because life circumstances changed and Covid definitely put an end to that period.

Now, after a couple of years, I saw the new Fritz 19 engine, with these new characters, and I started playing against them, and the results were interesting. But I didn't have any idea how these new Fritz bots played and at what level.

So I came up with the idea for an experiment: I would let the Fritz bots play at different levels against the chess.com bots and record the results. To get a reasonably reliable measure of performance, I would have each Fritz bot play 6 games as White and 6 games as Black against each chess.com bot.

Chess.com has 134 bots. Fritz has 6 bots with 6 different levels: beginner, hobby player, club player, strong club player, master candidate, grandmaster.

134 bots multiplied by 12 games is over 1600 games just for one level of a Fritz bot, so you can definitely have a lot of fun and see a lot of games!
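The arithmetic behind that figure can be checked in a few lines of Python. This is only a sketch: the variable names are mine, and the grand total assumes that every one of the six levels is run against all 134 bots, which is my assumption rather than a stated plan.

```python
# Figures taken from the article; the names are illustrative only.
CHESSCOM_BOTS = 134
GAMES_PER_PAIRING = 6 + 6  # 6 games as White plus 6 as Black
FRITZ_LEVELS = 6           # beginner up to grandmaster

games_per_level = CHESSCOM_BOTS * GAMES_PER_PAIRING

# Assumption: all six levels are played against all 134 bots.
games_all_levels = games_per_level * FRITZ_LEVELS

print(games_per_level)   # 1608
print(games_all_levels)  # 9648
```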

Still, it's a good amount of data to get an idea of how well Fritz is programmed to play like a human (because if it wins all the games, we can assume it's an engine).

Analysing the games after they have been played also makes it possible to understand how a human performance relates to the set of chess skills and chess understanding that we call a rating.

I believe this is more objective than playing in a tournament or online (cheating seems to be a plague online!), because the performance of our computer opponent should always be the same. For example, at beginner level, Fritz might be programmed to win 75% of games against a human player rated around 1800, while a human player rated 1000 would win only 1 in 4 games. Of course these figures are only speculation; once the experiment is finished it will be possible to give a better picture.
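To give percentages like these some scale, the standard Elo formula turns a rating gap into an expected score. The sketch below assumes the usual logistic Elo model with its 400-point scale; the function name is mine, and nothing here describes how Fritz actually calibrates its levels. Note that an expected score counts a draw as half a point, so it is not quite the same thing as a win percentage.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B (win = 1, draw = 0.5, loss = 0)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Equal ratings give an even match.
print(expected_score(1800, 1800))            # 0.5
# A 200-point edge is worth roughly three points out of four.
print(round(expected_score(2000, 1800), 2))  # 0.76
# The same gap seen from the weaker side: about one point in four.
print(round(expected_score(1800, 2000), 2))  # 0.24
```

In this model a 75% score and a 1-in-4 score both correspond to a gap of roughly 200 points, in opposite directions.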

In this article I'd like to share the results of a couple of matches. The games were interesting, and I'll add some thoughts on how these bots play and how they differ from humans.

The first match was between Deadlost, a bot on chess.com rated 1300, and the Beagle, also known as AllRound "beginner level". Here is a pic of him before the match!

The result was interesting: +5 -5 =2. It seems to confirm Deadlost's rating and could give an indication of the rating of the Allround beginner personality.

Here is a picture of the two players during a post-mortem analysis

I'm going to give highlights of some of the games, because I found some important differences between human and computer play. The games are commented by Fritz Tactical Analysis 6.4. When and if I add my own comments, I will preface them with DN:

Game 1: Allround beginner vs Deadlost. Pay attention to move 10, where the Fritz-based personality plays a very human move, the kind a true beginner would play. Move 23 is brilliant! See if you can find it, or check out the game!

White has lost all advantage in the endgame. This is a good example of how human beginners play.

Game 5 showed a very peculiar situation. White can give checkmate simply by playing Bf6, yet it doesn't play this move, and the Tactical Analysis doesn't consider it either.

Black has just taken on g2, threatening to promote by capturing on h1. For the Tactical Analysis the right move is Rg1, and Bf6 is not considered. This is typical engine behavior: engines are always counting material. But a human knows there is no way to lose the game after Bf6.

Before we look at the next 6 games played by Allround as Black, I'd like to show you the commentary inserted by Deadlost during the game. Once again the aim is to show that the engine is not human: the phrases we find in this game are repeated over and over again in every game. Perhaps chess.com could add an AI chat like the one used by WhatsApp to make their characters more human.

And now the highlights of the 6 games Allround played as Black.

Game 9: Please pay attention to this game, because it shows a pattern that we will also see in the next match, which will be played at Grandmaster level. Both engines play human moves in the opening. By move 12 White is winning, but the game lasts 84 moves and ends in a draw. On move 19, White makes a move which, in typical human fashion, is a blunder. Black is slow to take advantage of it, again in human-like fashion. Once the endgame is reached, the engine is at beginner level and doesn't "know" how to give checkmate with the rook. Even so, some of the king's moves are absurd: I have taught primary school kids, and they didn't really play like that, even when they didn't know how to give checkmate. Why is this important? In the next match, at GM level, we will see the same behaviour from the engine!

Game 10: For the first time I see a machine playing the Grob! This game shows both engines missing key moves again and again, but that's to be expected as they are beginners. On move 56 Black has a won game, with a queen and a bishop against a bare king, but he doesn't give checkmate, in some cases missing a mate in 2 moves. At the end of the game the constant repetition of the bishop move doesn't make much sense from a human point of view, even at beginner level.

Game 11 is a fast game which, to be honest, doesn't make much sense from White's point of view. The chess.com engine makes many absurd moves, especially for a 1300-rated player, giving Black a huge material advantage. In this game we find that the engine is able to see a mate in 4 moves.

Game 12: AllRound beginner's moves 37 and 38 don't make any sense and I don't really see anyone rated around 1300 playing them. Once again we see moves like this in the endgame. But I suspect that both engines were drunk at this point. This game shows a possible contradiction. If in game 10 the Allround beginner didn't know how to checkmate with a queen, how did he learn it 2 games later?

The second match I'd like to share was played against Hikaru, a bot on chess.com rated 2820 and modelled on Hikaru Nakamura.

AllRound was set at GM level, which could be around 2500-2600, and in this match AllRound had an amazing performance: Allround GM +10 -1 =1, even though the quality of some games is clearly not at GM level. Perhaps because of some bugs in the code, Allround didn't play the endgame the way it should at GM level.
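As a rough cross-check, a match score can be converted into a performance rating. The sketch below assumes the standard logistic Elo model against a single opponent and takes the chess.com bot ratings at face value, which is a big assumption since they come from a different rating pool. The function name is mine, and 12 games is a very small sample.

```python
import math


def performance_rating(opponent_rating: float, wins: int, losses: int, draws: int) -> float:
    """Estimate a performance rating from one match against a single opponent.

    Assumes the logistic Elo model; undefined for 0% or 100% scores.
    """
    games = wins + losses + draws
    score = (wins + 0.5 * draws) / games
    if score <= 0.0 or score >= 1.0:
        raise ValueError("performance rating is undefined for a 0% or 100% score")
    return opponent_rating + 400.0 * math.log10(score / (1.0 - score))


# Match 1: Allround beginner vs Deadlost (rated 1300), +5 -5 =2
print(round(performance_rating(1300, 5, 5, 2)))   # 1300
# Match 2: Allround GM vs the Hikaru bot (rated 2820), +10 -1 =1
print(round(performance_rating(2820, 10, 1, 1)))  # 3158
```

Under these assumptions the first match puts the beginner personality right at 1300. The second gives a figure far above the 2500-2600 guessed for the GM level, which suggests that either the Hikaru bot plays below its nominal 2820 or the GM level is stronger than guessed.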

But of course both engines failed at one thing that every human GM knows: resignation. Humans, especially when they know their opponent is rated 2600-2800, don't really play on to checkmate, because it's understood that a GM-level player has learned the most basic checkmates. Engines, on the other hand, most of the time don't resign. Another thing I found strange in the Hikaru bot was the opening repertoire as Black. I know that most top GMs today are universal players. They play a lot more than they did in the 1970s, so they need a repertoire that can surprise the opponent. Instead the Hikaru bot played almost the same opening in all of its games as Black. However, I don't know enough about Hikaru to say whether his own opening choices as Black are that limited.

Here is a picture of AllRound studying for the match!

Game 1: There are also some strange things in the analysis. For example, on move 14 the Tactical Analysis says that Black should have played a different move, which would have given an evaluation where Black is slightly better. If this is true, then 14.Bxg5 should be marked as doubtful or as a bad move, because it gives Black the advantage.

The same can be said for Black's move 19, which is a bad move because it gives White an advantage. Previously we couldn't comment on the quality of the moves because they were beginners, but the GM level is different and you expect the best moves, especially when, as in the case of the chess.com bot, it has an Elo rating of 2820!

Yet there are some amazing moments, like move 24 in the diagram above, when Fritz-Allround sacs a rook for no reason apparent to a human. In the end we see why: the bishop pair, which the engine manoeuvres like razors! Please keep this game in mind and see how the engine outplays the other bot as if it were a baby!

Game 2: What would you play as White in this position?

This is a nice tactic based on the weaknesses created by Black's pawn moves.

Game 3: By move 66, Allround has a won game. It is supposed to be a GM-level player, and I don't know any GM who can't win a won game and give checkmate with rook and bishop. But in this game it happened.

There was an interesting moment in the game: Black has just played 14...Kh8 to remove the king from a possible discovered check by the Bc4. Now it's White's turn: what would you play? Notice that most training positions are all about tactics. This one is not about tactics at all, but about a real understanding of the position and what needs to be played.

Of course you have to watch the game to find the answer, but the point here is that the engine can teach us some good moves. The idea behind this move is to avoid having an isolated pawn on the a-file, which would become an easy target for the enemy rook.

Game 6: Move 66 once again shows that we are playing against an engine, not a human.

I managed to sneak a picture of Hikaru and AllRound playing!

And now let's have a look at the last 6 games of the match where AllRound plays Black.

Game 7: we see how scary an engine with a bishop pair and 2 central passed pawns can be!

After the defeat in game 7, Allround won all the remaining games, starting with game 8, which ended with a checkmate by a pawn!

Game 9: at move 65 we see an underpromotion that doesn't make sense from a human point of view.

Game 10: once again in the endgame, around move 106, AllRound (Black) begins to shuffle its king with Kh1-h2, which is absurd.

Game 11: Black wins. A human would let the knight be captured while taking control of the promotion square (82...Kg2 and it's game over for White); instead Black plays a lot of strange knight moves.

Conclusion: The new Fritz 19 has given me endless hours of fun, thanks to games played against it, as well as games played against other engines and chess bots on the internet. The new Fritz engine has provided many opponents to play against, and it's cheaper than playing in OTB tournaments. There are many more questions I have about the program, because often we are limited in our imagination, and it will be a pleasure to discover the new features that can help me with training and chess improvement. While my initial intention was to use it to determine my rating, I discovered that I could use Fritz 19 to learn and practice a new opening, but that is material for another article!
