Fischer opened almost exclusively with the King's pawn and was quite successful with it. Is there truth to his famous claim that e4 is "best by test"? Now that large databases are widely available, we can test his assertion and answer the question that every chess player has faced: what is the best first move?

A survey of online databases shows that e4 scores slightly worse than d4. The exact percentage scores differ depending on which games are included – for instance, some databases only include grandmaster games, and this can affect the results. However, the overall story is quite consistent: none of these game collections appears to support Fischer's claim. My own database reflects the same trend.

| Database | e4 | d4 |
| --- | --- | --- |
| My Scid | 53.3% | 55.0% |
| ChessTempo (2200+) | 54.1% | 55.8% |
| Shredder | 54.5% | 56.3% |
| FICS (avg rating 2200) | 52.3% | 52.7% |
| Chess.com (master games) | 54.3% | 56.0% |
| NicBase | 53.9% | 56.1% |
| 365Chess (master games) | 54.7% | 55.8% |
| ChessBase Online | 53.2% | 54.5% |

For a while I paid considerable attention to the percentage score when choosing my openings. But focusing on percentage scores is quite naïve: no one would say that my 56.25% score in the US Open was a better performance than Anand's 35% in the 2013 World Championship. You have to take into account the strength of the opposition, and with all due respect to my opponents, they didn't play at the same level as Carlsen did. A quick check with my database suggests that the opponent's rating could be an important factor in explaining d4's higher score.

On average, Black was rated 6.32 points higher than White in games beginning with 1.e4. For 1.d4, the pattern reversed: now White was 6.78 points higher rated. It isn't hard to imagine why this trend exists. The King's pawn has a reputation for leading to sharper positions, while d4 is considered more positional. It is hard to say if this is true or not, since of course there are many positional e4 openings and tactical d4 openings (e.g., the Berlin variation of the Ruy Lopez and the Botvinnik variation of the Semi-Slav). But what matters is whether players believe that one opening is safer than another. If you are higher rated and playing White, you may prefer to outplay your opponent positionally instead of risking an upset in a tactical slugfest. Hence, you might open with 1.d4 as long as you think that it is the less aggressive choice. Similarly, if you are the underdog and playing White, you may be inclined towards 1.e4, since your opponent could easily blunder when faced with complications.

Here's why these considerations matter: we don't know whether d4's higher percentage score is evidence of its superiority or simply reflects the fact that people prefer 1.d4 when they are the better player. As an extreme illustration, it is easy to imagine that a master could score terrifically with 1.Nh3 if he only plays it against 1400-players. He's not winning because 1.Nh3 is a good move – he is winning because he is so much stronger than the opposition.

The ChessTempo.com database hints that this rating gap may fully explain d4's higher score. Here are the statistics for White:

| | Avg rating | Performance rating |
| --- | --- | --- |
| 1.e4 | 2416 | 2454 |
| 1.d4 | 2435 | 2473 |

It is true that White's performance rating with d4 is 19 points higher than his performance with e4. But this is exactly what we would expect given that the average d4 player is rated 19 points above the average e4 player. White outperforms his rating by the exact same amount whether he plays e4 or d4, so there isn't any evidence that either opening is superior. The same pattern is present in my database:

| | Avg rating | Performance rating |
| --- | --- | --- |
| 1.e4 | 2299 | 2327 |
| 1.d4 | 2340 | 2368 |
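The comparison in the two tables above comes down to one subtraction per row: performance rating minus average rating. A minimal sketch of that arithmetic, using only the figures quoted in the tables (the dictionary layout and the `overperformance` helper are illustrative names, not anything taken from the databases themselves):

```python
# Figures copied from the two tables above: (average rating, performance rating).
tables = {
    "ChessTempo": {"1.e4": (2416, 2454), "1.d4": (2435, 2473)},
    "Author's database": {"1.e4": (2299, 2327), "1.d4": (2340, 2368)},
}


def overperformance(avg_rating, performance_rating):
    """Points by which White performs above his own average rating."""
    return performance_rating - avg_rating


surplus = {
    database: {move: overperformance(*ratings) for move, ratings in rows.items()}
    for database, rows in tables.items()
}

for database, by_move in surplus.items():
    print(database, by_move)
```

Within each database the surplus is identical for both moves, which is the point of the argument: the gap in performance ratings mirrors the gap in average ratings.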

We can use statistics to test if the rating gap explains the difference in percentage scores. First, categorize all the games into bins based on the rating gap. How well does White score when he plays 1.e4 and is 350 points higher rated? What if he plays d4 instead? Repeat this for when the rating gap is 349, 348, etc., all the way down to -349.

Is d4 better than e4?

| Rating gap | White's percentage score with d4 | White's percentage score with e4 |
| --- | --- | --- |
| 350 | 94.2 | 90.0 |
| … | … | … |
| 150 | 75.0 | 71.7 |
| 149 | 72.0 | 74.6 |
| 148 | 71.2 | 76.6 |
| 147 | 70.6 | 73.7 |
| 146 | 72.6 | 72.3 |
| … | … | … |
| -349 | 8.3 | 10.3 |

Rating gap = White's rating minus Black's rating. Only games with both players above 2199 were used. Results based on 685,184 games.
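The binning step can be sketched in a few lines of Python. This is only an illustration of the idea, not the code used for the table: the sample `games` records are made up, and the `bin_scores` function and its parameters are hypothetical names. It assumes each game is stored as the first move, both ratings, and White's result (1, 0.5 or 0).

```python
from collections import defaultdict

# Made-up sample records: (first move, White's rating, Black's rating, White's result).
games = [
    ("e4", 2450, 2300, 1.0),
    ("e4", 2450, 2300, 0.5),
    ("d4", 2500, 2350, 1.0),
    ("d4", 2400, 2250, 0.5),
    ("d4", 2310, 2180, 1.0),  # dropped: Black is not above 2199
    ("e4", 2700, 2250, 1.0),  # dropped: a gap of 450 is outside the range
]


def bin_scores(games, min_rating=2200, max_gap=350):
    """Return {(rating gap, move): (White's percentage score, number of games)}."""
    totals = defaultdict(lambda: [0.0, 0])  # (gap, move) -> [points, games]
    for move, white, black, result in games:
        if white < min_rating or black < min_rating:
            continue
        gap = white - black  # rating gap = White's rating minus Black's rating
        if abs(gap) > max_gap:
            continue
        totals[(gap, move)][0] += result
        totals[(gap, move)][1] += 1
    return {key: (100.0 * points / n, n) for key, (points, n) in totals.items()}


scores = bin_scores(games)
for (gap, move), (percentage, n) in sorted(scores.items()):
    print(gap, move, percentage, n)
```

Keeping the game count next to each percentage matters, because a bin with a handful of games should carry less weight in any later test than a bin with thousands.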

Our null hypothesis is that the percentage scores for e4 and d4 are the same in each bin. Now we want to know if the data is consistent with our assumption. To measure this, we ask, "if e4 and d4 really were equally good, how likely is it that we would see results as extreme as the ones that actually occurred?" If this likelihood (called the p-value) is low, that would imply that e4 and d4 are probably not equal. In other words, if the real world outcome is unlikely given our assumption, then our assumption should be rejected. On the other hand, if this likelihood/p-value is high, then we could keep our assumption. It would mean that data similar to what we have seen is quite possible when our assumption is true. Typically, we reject if the p-value is below 5% and fail to reject if it is higher.

So what happens when we test whether e4 and d4 are equal in each rating bin? The p-value is 0.9633, so we are not even close to rejecting. The data is consistent with e4 and d4 being equally good. Thus, once we controlled for the fact that the rating gap is different for e4 and d4, the higher percentage score for d4 vanished.
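The text above does not say which statistical test produced the p-value, so the following is only one possible way to ask the same question, not the method behind the 0.9633 figure. It is a stratified permutation test: within each rating bin the results are shuffled between e4 and d4 games, and the p-value is the share of shuffles whose e4/d4 difference is at least as extreme as the observed one. The toy data, the function names, and the choice of weighting are all assumptions made for illustration.

```python
import random


def stratified_statistic(bins):
    """Weighted sum over bins of (d4 mean score - e4 mean score)."""
    total = 0.0
    for moves, results in bins:
        d4 = [r for m, r in zip(moves, results) if m == "d4"]
        e4 = [r for m, r in zip(moves, results) if m == "e4"]
        if not d4 or not e4:
            continue  # a bin with only one opening offers no comparison
        weight = len(d4) * len(e4) / (len(d4) + len(e4))
        total += weight * (sum(d4) / len(d4) - sum(e4) / len(e4))
    return total


def permutation_p_value(bins, n_permutations=2000, seed=0):
    """Two-sided p-value under the null that e4 and d4 score the same in every bin."""
    rng = random.Random(seed)
    observed = abs(stratified_statistic(bins))
    hits = 0
    for _ in range(n_permutations):
        shuffled = []
        for moves, results in bins:
            permuted = list(results)
            rng.shuffle(permuted)  # reshuffle results only within the same bin
            shuffled.append((moves, permuted))
        if abs(stratified_statistic(shuffled)) >= observed - 1e-12:
            hits += 1
    return (hits + 1) / (n_permutations + 1)


# Toy bins (made up): each is (list of first moves, list of White's results).
moves = ["d4"] * 4 + ["e4"] * 4
equal_bins = [(moves, [1, 0.5, 0.5, 0, 1, 0.5, 0.5, 0])]
lopsided_bins = [(["d4"] * 20 + ["e4"] * 20, [1.0] * 20 + [0.0] * 20)]

p_equal = permutation_p_value(equal_bins)
p_lopsided = permutation_p_value(lopsided_bins)
print(p_equal, p_lopsided)
```

In the first toy bin both openings score identically, so every shuffle is at least as extreme as the observed difference of zero and the p-value is 1. In the second, d4 wins every game and e4 loses every game, so almost no shuffle matches it and the p-value falls far below 5%.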

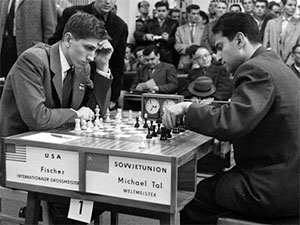

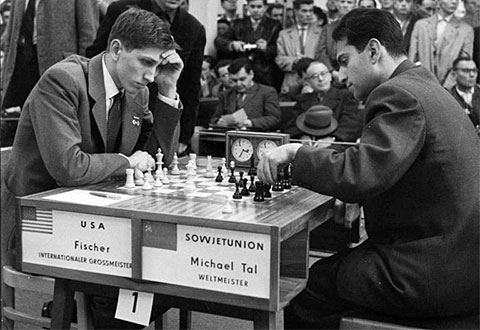

Bobby Fischer swore by 1.e4

To be careful, it should be noted that "we fail to reject that e4 and d4 are equal" is not quite the same thing as "we have proved that e4 and d4 are equal." It is possible that there is a small difference that is real but too tiny to distinguish from luck. For example, one coin might have a 51% chance of heads while another gives a 50% chance, but in 10 tosses it is hard to tell the difference. A statistical test will probably fail to reject that they are equal. However, in a very large number of coin tosses, it does eventually become clear that the coins are not the same – it would be improbable that luck alone causes one coin to consistently be heads more often. Fortunately, in this case there is an abundance of data. There are 1400 categories: 700 rating gaps from -349 to 350, and within each rating bin there is one category for e4 and one category for d4. But databases are so big that even after dividing the data into 1400 bins, there are still hundreds or thousands of games in each bin, with very few exceptions. Thus, any difference between e4 and d4 would have to be extremely small to escape notice from our statistical test.
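The coin example can be made concrete with a rough calculation. The sketch below measures the expected gap between a 51% coin and a 50% coin in units of standard error, using the usual normal approximation for a difference of two proportions. The function name `z_score` and the sample sizes are illustrative choices, and the 1.96 threshold is the conventional cutoff matching a 5% two-sided test.

```python
import math


def z_score(p1, p2, n):
    """Expected gap between two coins, in standard errors, after n tosses of each."""
    standard_error = math.sqrt(p1 * (1 - p1) / n + p2 * (1 - p2) / n)
    return (p1 - p2) / standard_error


for n in (10, 10_000, 1_000_000):
    z = z_score(0.51, 0.50, n)
    verdict = "distinguishable" if z > 1.96 else "lost in the noise"
    print(n, round(z, 2), verdict)
```

With 10 tosses the expected gap is a small fraction of one standard error, while with a million tosses it is around 14 standard errors. The same logic is why large bins make it hard for a real e4/d4 difference to hide.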

Both the King's pawn and the Queen's pawn have been very popular first moves; both have been played by many great champions. There isn't any consensus on which is better, and a simple statistical test suggests that they are equally good. A natural and reassuring result.

A minor quibble: usually we prefer models that are simple and have a handful of variables to complicated models with many variables. Instead of having 1400 bins, wouldn't it be nicer if we had one variable to control for ratings and another variable for e4 vs. d4? Then we could do the same job with 2 variables instead of 1400. And Elo's formula for the expected score gives us a ready-made approach for dealing with the rating gap. It would strengthen the case for our conclusion if we got the same result using two different methods. And yet, this seemingly easy task became far more complicated – and far more interesting – than it appeared.
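For reference, the standard form of Elo's expected-score formula is a logistic curve in the rating gap, with a 400-point scale. The sketch below shows only that textbook formula; how it is used as a control variable is left to the continuation, and the function name is an illustrative choice. Note that the plain formula treats both players symmetrically, so it does not include any advantage for having the White pieces.

```python
def elo_expected_score(rating_gap):
    """Expected score (between 0 and 1) for the player who is `rating_gap` points higher rated."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


for gap in (-350, -150, 0, 150, 350):
    print(gap, round(100 * elo_expected_score(gap), 1))
```

Because the curve turns any rating gap into a single expected score, one number can stand in for the whole column of bins when controlling for ratings.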

To be continued…